Knowledge

&

Value Creation

ANALYZING BIBLIOGRAPHIC DATABASES

A NES Blueprint

Types of Problems in Bibliographic Databases

Databases filled with bibliographic records accompanied by links to fulltext provide an un-paralleled service to students, scientists and researchers. These databases, however, often are plagued by errors—both common and rare—that hamper effective search and retrieval of relevant items or accurate linking. It is imperative to have clean and consistently presented data. From a retrieval standpoint, an erroneous or wrongly entered record is a lost entity. Enriched with years of expertise, NES is equipped to tackle these problems through:

1. Bibliographic database analysis; and

2. Rectification of errors and normalization of variations

A database can arrive in any proprietary or other format such as MARC, CDF (comma de-limited format), TDF (tab de-limited format), TAGGED, DUBLIN Core, MS Access, HTML, and XML. NES reviews the data and prepares a specification for cleanup. Problems in databases usually are two-fold:

a) Format errors

b) Data errors

a) Format errors

One of the major errors in MARC format is the improper/wrong positioning of the data elements, which results in disarray of fields. CDF & TDF could miss some fields or position irrelevant data in fields or skip field de-limiters. Tagged format data at times misses the record de-limiters and could storm one field into another. Commonly, all these formats could have empty/null records. With decades of experience, NES is equipped to handle such situations. NES' utilities report the instances mentioned above and suggest the corrective measures to be taken.

b) Data errors

These types of errors constitute a major portion that affects the actual content of the bibliographic records.

Some of the errors are listed below:

i) Missing fields

Essential fields go missing resulting in instances such as

Journal article records without journal title/title/volume/issue information

Journal article records without journal title/title/volume/issue information  Book records without title/publisher

Book records without title/publisher  Records without an individual/institutional author

Records without an individual/institutional author  Records without collation/imprint

Records without collation/imprint  Dissertations without the institution names

Dissertations without the institution names  Conference records without conference details

Conference records without conference details

ii) Wrong representation/misplaced data in various fields

Just alphabets in year field/Year data beginning with anything other than 1 or 2

Just alphabets in year field/Year data beginning with anything other than 1 or 2  Numerals only in the author field

Numerals only in the author field  ISBN/ISSN with lesser/more characters than permitted

ISBN/ISSN with lesser/more characters than permitted  Records without title

Records without title  Repetition of fields such as author repeated in title or vice-versa

Repetition of fields such as author repeated in title or vice-versa  Repetition of terms in index terms/keywords

Repetition of terms in index terms/keywords

iii) Messed up fields

Instances of large strings of stars, question marks, slash, etc.

Instances of large strings of stars, question marks, slash, etc.  Too many space/null characters within the data

Too many space/null characters within the data  Field/Record de-limiters occurring within the running text of an abstract or

Field/Record de-limiters occurring within the running text of an abstract or

full-text

iv) Bad records

Records that are less than 50K in size could have missing fields/data

Records that are less than 50K in size could have missing fields/data  Records with phrases such as Test record, check & testing, etc.

Records with phrases such as Test record, check & testing, etc.

v) Sundry checks performed

Check all entities, foreign-language character sets & non-ASCII characters used in the dataset.

Check all entities, foreign-language character sets & non-ASCII characters used in the dataset.  Examine if large text data fields (abstracts, notes, full-text, etc.) containing tables, paragraphs, indented text are properly formatted.

Examine if large text data fields (abstracts, notes, full-text, etc.) containing tables, paragraphs, indented text are properly formatted.  Evaluate journal titles/publisher names/places for consistency and construct look-up tables to standardize them

Evaluate journal titles/publisher names/places for consistency and construct look-up tables to standardize them  Run utilities to report non-functioning URLs in a database

Run utilities to report non-functioning URLs in a database

vi) To analyze and rectify XML files, NES can

Assist to design, create and modify XSD schema

Assist to design, create and modify XSD schema  Provide solutions to counter discrepancies in the XML data

Provide solutions to counter discrepancies in the XML data  Validate XML files using different schemas

Validate XML files using different schemas

De-duping Bibliographic Records

A common and un-avoidable feature in database files is the occurrence of duplicate records. These records look exactly similar with or without slight variations.

Why do they occur?

Unknown duplication done at the same division due to accidental copying

Unknown duplication done at the same division due to accidental copying

of records in the input tool. Improper bibliographic control—when two divisions of the same

Improper bibliographic control—when two divisions of the same

organization are distributed in different geographic locations. When two or more bibliographic databases are integrated

When two or more bibliographic databases are integrated

NES' COMPARE & COMPOSITE TECHNOLOGY

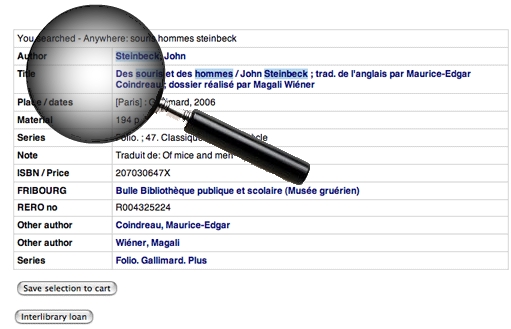

This a dynamic semi-automated process that unifies SIMILAR bibliographic records irrespective of the source of arrival. A multitude of rules have been laid down for the software to compare bibliographic elements pertaining to various publication types.

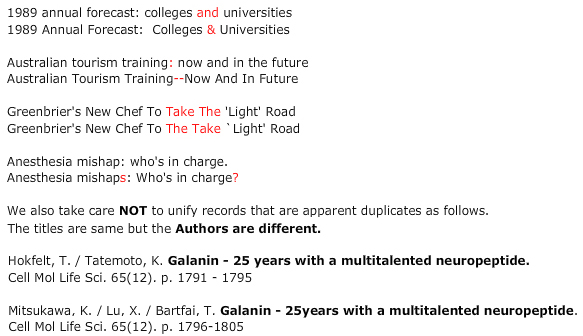

At times, minor variations exist between records from different databases because of typing or other errors. NES goes an extra mile in handling these subtle variations while de-duping them. Some examples follow:

Author Variations

Title Variations

We execute several Quality Checks on the

customers’ publications.

We execute several Quality Checks on the

customers’ publications. We have experience in performing Quality

Assurance tests on

We have experience in performing Quality

Assurance tests on

databases/publications using specifications, support files and other

specially developed utilities. With nearly ten years of experience in the

field, we have developed scores of program

utilities to check quality and generate reports on

erroneous or

With nearly ten years of experience in the

field, we have developed scores of program

utilities to check quality and generate reports on

erroneous or

misplaced contentin databases and publications.

Title Variations